RAM and SSD Cache Choices for OpenZFS

Some important hardware characteristics and differences, as well as the operating mechanisms used in OpenZFS.

Storage options with RAM and SSDs in OpenZFS systems

Choosing RAM and SSDs is just as important as selecting the proper disks for your OpenZFS build. But first, let’s discuss some important hardware differences and OpenZFS operating mechanisms that can affect those choices.

Error Correction Code Memory vs Regular Memory (ECC vs non-ECC RAM)

There are two main variants of RAM on the market:

- ECC Memory

- Non-ECC Memory

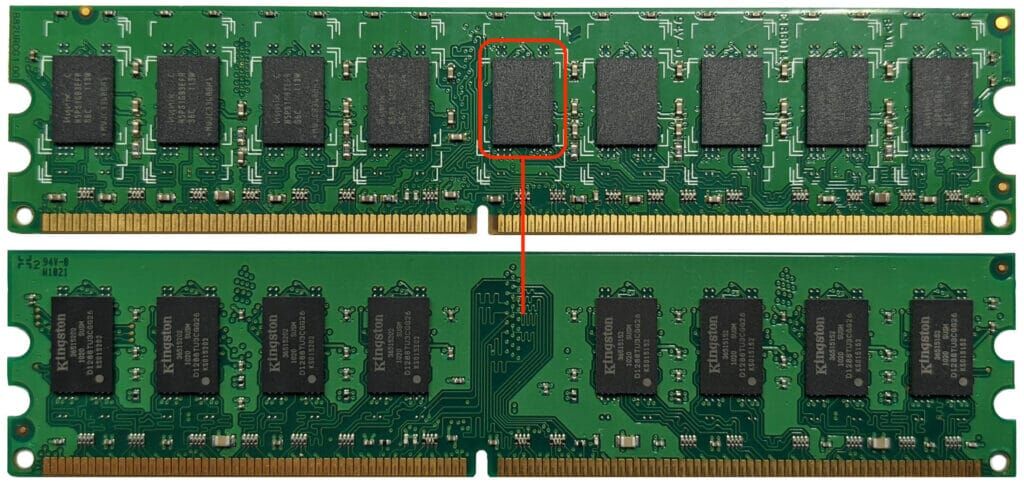

ECC is a type of RAM that uses Error Correction Code mechanisms to detect and correct single-bit data errors on the fly. ECC memory modules are physically different from non-ECC ones because they contain extra chips that store the error-correction check bits. Those extra chips, and their manufacturing overhead, are also one reason why ECC memory is more expensive.

An ECC RAM module (top) vs a non-ECC one. Notice the extra chip on the ECC one?

What is a single-bit error?

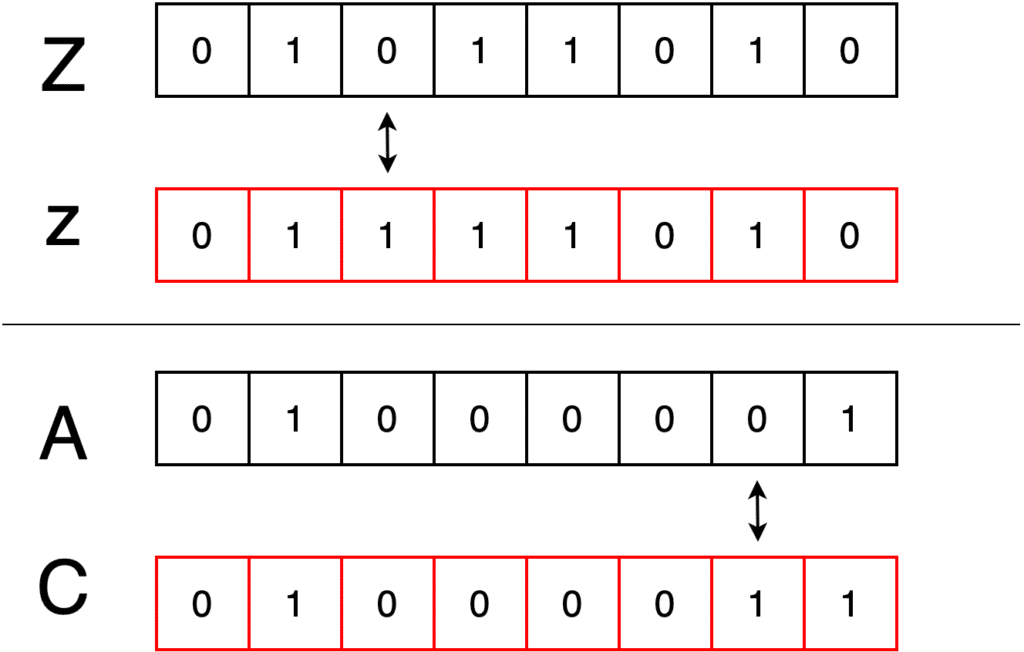

A single-bit error is a flipped bit: a bit that changes from 0 to 1, or vice versa, while the data is stored in or passes through the system. The cause could be anything from a bad connection on a disk (or another component) to a cosmic ray hitting your system at the wrong moment.

Although a single bit error would not usually wreak havoc within your pool, it can sometimes cause significant issues: it can corrupt an important file (think databases), corrupt your data indirectly by corrupting a critical system instruction, or crash the whole system during pool writes. Also, if not detected early, the accumulation of those errors can lead to a significantly corrupted pool.

An example of a single bit change that results in the uppercase letter (Z) being converted to lowercase (z), while another change results in the letter (A) being converted to (C).
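The caption’s examples can be checked by XOR-ing the character codes: each pair differs in exactly one bit. A minimal Python sketch (the helper names are ours, purely for illustration):

```python
def flip_bit(ch: str, bit: int) -> str:
    """Return the character whose code differs from ch only in the given bit."""
    return chr(ord(ch) ^ (1 << bit))


def differing_bits(a: str, b: str) -> int:
    """Count how many bits differ between two characters' codes."""
    return bin(ord(a) ^ ord(b)).count("1")


# 'Z' is 0b01011010 and 'z' is 0b01111010: only bit 5 differs.
print(flip_bit("Z", 5), differing_bits("Z", "z"))  # z 1
# 'A' is 0b01000001 and 'C' is 0b01000011: only bit 1 differs.
print(flip_bit("A", 1), differing_bits("A", "C"))  # C 1
```

One flipped bit is enough to turn a valid value into a different, equally valid-looking one, which is why this kind of corruption is hard to spot without extra check bits.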

How can ECC memory help protect my data?

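ECC memory protects against bit-flips by checking the data against its stored error-correction code every time it is read, in real time. The ECC logic that does this sits in the memory controller, which on modern systems is part of the CPU.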

The idea starts simple: since all data is stored as binary code (1s and 0s), the system can count the ones (1) in each chunk of data, which gives either an even or an odd number, and store that result as an extra parity bit (control bit) on the extra RAM chip. When the data is read back (think: a connected client requesting a file from the OpenZFS cache, which lives in RAM), the count is repeated and compared with the stored parity bit. A mismatch reveals a bit-flip, but a single parity bit cannot tell which bit flipped, so on its own it can only detect the error. ECC therefore stores several check bits per word (commonly 8 check bits for every 64 data bits), each covering a different subset of the data bits. The pattern of mismatching check bits pinpoints the flipped bit, and the memory controller corrects it on the fly.

ECC RAM can also detect two-bit errors within a single word, but it cannot correct them: the common scheme is single-error correction, double-error detection (SECDED). When an uncorrectable error is found, the system typically reports it and halts rather than keep processing corrupt data. For OpenZFS, this mechanism greatly reduces the chance of corrupt data in RAM being passed to the disks, corrupting your pools or causing errors in the stored files.
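To see why a lone parity bit is not enough, and how extra check bits make correction possible, here is a toy Python sketch. It protects 4 data bits with an extended Hamming (8,4) code, the same SECDED idea that ECC DIMMs apply to much wider words. Real ECC is computed in hardware by the memory controller; the function names and the 4-bit word size here are illustrative assumptions only.

```python
from functools import reduce
from typing import Optional


def parity(bits: list[int]) -> int:
    """One even-parity bit: reveals that a bit flipped, not which one."""
    return reduce(lambda a, b: a ^ b, bits, 0)


def encode(data: list[int]) -> list[int]:
    """Extended Hamming (8,4): 4 data bits -> 8-bit SECDED codeword."""
    d1, d2, d3, d4 = data
    code = [0, 0, 0, d1, 0, d2, d3, d4]   # data at positions 3, 5, 6, 7
    code[1] = d1 ^ d2 ^ d4                # checks positions 1, 3, 5, 7
    code[2] = d1 ^ d3 ^ d4                # checks positions 2, 3, 6, 7
    code[4] = d2 ^ d3 ^ d4                # checks positions 4, 5, 6, 7
    code[0] = parity(code[1:])            # overall parity of positions 1..7
    return code


def decode(received: list[int]) -> tuple[str, Optional[list[int]]]:
    """Return (status, data); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(received)
    # XOR of the positions that hold a 1 is 0 for a valid codeword and
    # equals the position of the flipped bit after a single error.
    syndrome = reduce(lambda acc, i: acc ^ i,
                      (i for i in range(1, 8) if code[i]), 0)
    if parity(code) == 0:
        if syndrome != 0:
            return "uncorrectable", None  # two flips: detected, not fixable
        status = "ok"
    else:
        code[syndrome] ^= 1  # one flip; syndrome 0 means bit 0 itself flipped
        status = "corrected"
    return status, [code[3], code[5], code[6], code[7]]


# Parity alone: the flip is visible, but its position is not.
print(parity([1, 0, 1, 1]) != parity([1, 0, 0, 1]))  # True

word = encode([1, 0, 1, 1])
one_flip = word.copy()
one_flip[6] ^= 1
two_flips = word.copy()
two_flips[3] ^= 1
two_flips[5] ^= 1
print(decode(word))       # ('ok', [1, 0, 1, 1])
print(decode(one_flip))   # ('corrected', [1, 0, 1, 1])
print(decode(two_flips))  # ('uncorrectable', None)
```

Like real SECDED, this toy code is only guaranteed for one or two flipped bits; three or more flips in the same word can be miscorrected.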

Do I have to use ECC memory with OpenZFS?

No, but you really should.

ZFS has the following built-in mechanisms to detect and repair data corruption:

- ZFS performs end-to-end checksumming of your data. Those checksums help detect data corruption during read operations.

- ZFS automatically attempts to repair corrupt disk blocks using the redundant data available in its mirror and RAIDZ vdevs.

- Scrubs, which you (or TrueNAS) can schedule to run periodically, read and verify all data to detect and repair bit-rot (which usually happens on degrading disk media).

- It also checksums replication streams to make sure data was not corrupted in flight.
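The checksum-and-repair loop from the list above can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: a two-way mirror, SHA-256 standing in for ZFS’s checksum (OpenZFS defaults to fletcher4 and also offers sha256, among others), and the checksum kept in the parent “pointer” rather than next to the block, which is what lets ZFS tell which copy is the bad one. The class and method names are hypothetical.

```python
import hashlib


class ToyMirror:
    """Two copies of every block; checksums live in the parent pointer."""

    def __init__(self):
        self.disks = [{}, {}]   # block id -> bytes, one dict per disk
        self.pointers = {}      # block id -> expected SHA-256 digest

    def write(self, block_id: str, data: bytes) -> None:
        self.pointers[block_id] = hashlib.sha256(data).digest()
        for disk in self.disks:
            disk[block_id] = data

    def read(self, block_id: str) -> bytes:
        expected = self.pointers[block_id]
        for disk in self.disks:
            data = disk[block_id]
            if hashlib.sha256(data).digest() == expected:
                # Self-heal: rewrite any copy that fails the checksum.
                for other in self.disks:
                    if hashlib.sha256(other[block_id]).digest() != expected:
                        other[block_id] = data
                return data
        raise OSError(f"{block_id}: all copies fail their checksum")

    def scrub(self) -> None:
        """Read every block so latent damage is found and repaired."""
        for block_id in self.pointers:
            self.read(block_id)


pool = ToyMirror()
pool.write("blk0", b"important record")
pool.disks[0]["blk0"] = b"importent record"  # simulate bit-rot on disk 0
print(pool.read("blk0"))       # b'important record' (served from disk 1)
print(pool.disks[0]["blk0"])   # b'important record' (repaired copy)
```

Note what this model cannot catch: if the data was already corrupted in RAM before `write()` computed its checksum, the bad data gets a valid checksum and is stored as if it were correct.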

So, ZFS itself was not designed to depend on ECC RAM, and it runs on non-ECC RAM too. The same applies to OpenZFS, which descends from the original ZFS code. Keep in mind, though, that ZFS computes its checksums in RAM: if a bit flips in memory before the checksum is calculated, ZFS will faithfully checksum and store the corrupted data. And since we are talking about production servers intended to host and protect the integrity of your company’s or customers’ mission-critical data, you should understand the threat non-ECC memory poses to your data and operations continuity.

If you think the non-ECC memory you purchased is reliable, and that the chance of data corruption is low enough to ignore, take a look at the study “DRAM Errors in the Wild: A Large-Scale Field Study”. It is the result of more than two years of work by a team from the University of Toronto and Google, who examined memory errors across a large fleet of Google’s production servers.

The end result: on average, there is roughly one bit-flip every 14 to 40 hours for every gigabit of DRAM.
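The 14-to-40-hour figure comes from the study’s headline error rate of 25,000 to 70,000 errors per billion device-hours per megabit of DRAM (that is, FIT per Mbit). The Python below redoes that conversion, assuming 1 Gbit = 1024 Mbit. Bear in mind this is a fleet-wide average: the same study reports that more than 8% of DIMMs were affected by errors per year, so errors cluster on some modules rather than spreading evenly.

```python
# Fleet-wide DRAM error rates reported by the study, in FIT per Mbit
# (1 FIT = 1 error per 10**9 device-hours).
FIT_PER_MBIT_LOW = 25_000
FIT_PER_MBIT_HIGH = 70_000
MBIT_PER_GBIT = 1024  # assumption: binary gigabit


def hours_between_errors(fit_per_mbit: float, capacity_mbit: float) -> float:
    """Mean hours between bit errors for a given amount of DRAM."""
    errors_per_hour = fit_per_mbit * capacity_mbit / 1e9
    return 1 / errors_per_hour


for fit in (FIT_PER_MBIT_HIGH, FIT_PER_MBIT_LOW):
    hours = hours_between_errors(fit, MBIT_PER_GBIT)
    print(f"{fit:,} FIT/Mbit -> one error every {hours:.1f} h per Gbit")
# 70,000 FIT/Mbit -> one error every 14.0 h per Gbit
# 25,000 FIT/Mbit -> one error every 39.1 h per Gbit
```

Because the rate is per megabit, the expected time between errors shrinks in proportion to the amount of memory: doubling the RAM halves it.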

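Also keep in mind that ZFS does not have a post-corruption filesystem repair toolset. Unlike EXT3/4, XFS, and even NTFS, which have their own filesystem scan and repair tools, ZFS has no fsck-style utility, and a badly corrupted pool often cannot be rescued (recovery options such as rewinding to an earlier transaction group at import time exist, but they are limited). Once a ZFS pool is corrupted, the data is gone. Most of the time.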

Is ECC memory faster than non-ECC?

No. Because of the extra error-checking work, ECC memory usually has slightly higher latency than non-ECC memory. The difference is so small that it is negligible and will not noticeably affect server performance.

What are the requirements for using ECC memory in my TrueNAS build?

Both the CPU and the mainboard must support ECC. Refer to your hardware specification sheets to make sure they can handle ECC RAM. Also make sure you purchase the exact type of ECC memory your hardware supports; for instance, some mainboards cannot handle buffered ECC modules.

Registered vs Unregistered Memory

When shopping for RAM modules, we usually have to choose between registered and unregistered sticks – also referred to as buffered and unbuffered RAM.

Registered (buffered) RAM has an extra register chip acting as an interface between the actual memory chips and the system’s memory controller, which mostly resides on the CPU. This decreases the electrical load on the memory controller and allows the system to support a larger total amount of RAM. This is why, when you check mainboard specifications, you will usually see a statement along the lines of “This mainboard supports 64GB ECC UDIMM and up to 128GB ECC RDIMM”. Here is an example from Supermicro’s website.

Registered memory costs a bit more than the unregistered version, due to the extra circuitry required to implement the register chip.

If your application requires a humongous amount of RAM, then RDIMMs are the way to go. Keep in mind that most consumer mainboards, and some server mainboards too, do not support buffered memory, so make sure the mainboard you opt for supports it.

Tip: Just like any other electronic or electro-mechanical component in a computer system, ECC RAM can still fail. If your system has ECC memory and halts frequently, check your RAM, disks and cabling/connectors: one of your memory modules may have gone bad, a disk may be failing, or a cable may not be securely connected. Early detection of such issues can help protect your data.

One popular all-in-one (AIO) hardware-testing option is Hiren’s BootCD PE, a bootable CD containing a selection of free and/or open-source inspection tools, including Memtest86+.

OpenZFS Caching and RAM Usage

OpenZFS has two different types of caching, read cache and write cache, and it uses system memory for both, but in different ways and to different degrees.

Read Caching

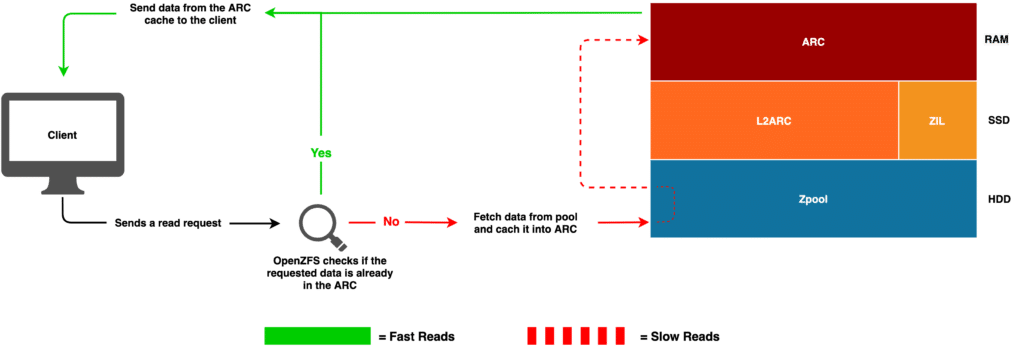

The OpenZFS read cache has two levels:

- ARC (Adaptive Replacement Cache)

- L2ARC (Level2 Adaptive Replacement Cache)

What is ARC?

ARC is the first level of OpenZFS read cache. It consists of hot data that has been read from the pool and kept in RAM.

ARC lives in RAM and is shared by all pools in the storage system. When a client requests a file from your OpenZFS/TrueNAS system, its blocks are read from the pool’s disk array and kept in system RAM as they are sent to the client over the network.

This speeds up subsequent reads of the same data, since no disk reads are needed: the system first looks up the requested blocks in the ARC, and if they are there, it is a cache hit.

This translates to: for multi-client environments and servers serving large numbers of files, the more RAM your system has the better.

Before going to the disks, ZFS always checks whether the requested data already exists in the ARC.
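ARC gets its name from the policy it is based on: the Adaptive Replacement Cache algorithm published by Megiddo and Modha. It balances a recency list (blocks seen once recently) against a frequency list (blocks seen repeatedly) and uses “ghost” lists of recently evicted keys to tune that balance. The Python sketch below is the textbook algorithm over fixed-size blocks, not OpenZFS’s implementation, which adds variable block sizes, memory-pressure handling and much more. It shows the practical payoff for a file server: a one-off sequential read does not flush frequently used data.

```python
from collections import OrderedDict


class ARC:
    """Textbook Adaptive Replacement Cache over block keys.

    T1: blocks seen once recently; T2: blocks seen at least twice.
    B1/B2: ghost lists holding only the keys recently evicted from T1/T2;
    hits on them move the adaptive target size p of T1.
    """

    def __init__(self, capacity: int):
        self.c = capacity
        self.p = 0.0  # target size of T1
        self.t1, self.t2 = OrderedDict(), OrderedDict()
        self.b1, self.b2 = OrderedDict(), OrderedDict()
        self.hits = self.misses = 0

    def _replace(self, in_b2: bool) -> None:
        # Evict the LRU block of T1 or T2 into the matching ghost list.
        if self.t1 and (len(self.t1) > self.p
                        or (in_b2 and len(self.t1) == self.p)):
            key, _ = self.t1.popitem(last=False)
            self.b1[key] = None
        else:
            key, _ = self.t2.popitem(last=False)
            self.b2[key] = None

    def access(self, key) -> bool:
        """Request a block; return True on a cache hit."""
        if key in self.t1 or key in self.t2:  # real hit: promote to T2
            self.t1.pop(key, None)
            self.t2.pop(key, None)
            self.t2[key] = None
            self.hits += 1
            return True
        self.misses += 1
        if key in self.b1:  # ghost hit: recency deserves more room
            self.p = min(self.c, self.p + max(len(self.b2) / len(self.b1), 1))
            self._replace(in_b2=False)
            del self.b1[key]
            self.t2[key] = None
        elif key in self.b2:  # ghost hit: frequency deserves more room
            self.p = max(0, self.p - max(len(self.b1) / len(self.b2), 1))
            self._replace(in_b2=True)
            del self.b2[key]
            self.t2[key] = None
        else:  # brand-new block
            l1 = len(self.t1) + len(self.b1)
            total = l1 + len(self.t2) + len(self.b2)
            if l1 == self.c:
                if len(self.t1) < self.c:
                    self.b1.popitem(last=False)
                    self._replace(in_b2=False)
                else:
                    self.t1.popitem(last=False)
            elif total >= self.c:
                if total == 2 * self.c:
                    self.b2.popitem(last=False)
                self._replace(in_b2=False)
            self.t1[key] = None
        return False


cache = ARC(4)
for block in [1, 2, 1, 2]:      # two hot blocks, each read twice
    cache.access(block)
for block in range(100, 110):   # a one-off sequential scan
    cache.access(block)
print(cache.access(1), cache.access(2))  # True True: hot blocks survived
```

A plain LRU cache of the same size would have lost blocks 1 and 2 to the scan; here the scan only churns through T1 while T2 keeps the hot blocks.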

How much RAM does OpenZFS use for the ARC cache?

OpenZFS, and TrueNAS, will use approximately up to 90% of available system memory for its ARC cache (see the screenshot in the 1st article). This is possible with TrueNAS because the underlying FreeBSD OS is quite RAM efficient.
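To see how much RAM the ARC is actually using, you can read its counters. On Linux, OpenZFS publishes them in `/proc/spl/kstat/zfs/arcstats` (FreeBSD-based systems expose the same counters under the `kstat.zfs.misc.arcstats` sysctl tree). The sketch below assumes the Linux file layout, two header lines followed by name/type/value rows, and reports the current ARC size, its configured maximum (`c_max`) and the hit ratio. The helper names are our own.

```python
from pathlib import Path

ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")  # Linux OpenZFS location


def parse_arcstats(text: str) -> dict[str, int]:
    """Parse kstat text: skip the two header lines, keep name -> value."""
    stats = {}
    for line in text.splitlines()[2:]:
        fields = line.split()
        if len(fields) != 3:
            continue
        name, _kstat_type, value = fields
        try:
            stats[name] = int(value)
        except ValueError:
            continue  # skip anything that is not a plain integer counter
    return stats


def summarize_arc(stats: dict[str, int]) -> tuple[float, float, float]:
    """Return (ARC size in GiB, ARC maximum in GiB, hit ratio)."""
    gib = 2 ** 30
    lookups = stats["hits"] + stats["misses"]
    hit_ratio = stats["hits"] / lookups if lookups else 0.0
    return stats["size"] / gib, stats["c_max"] / gib, hit_ratio


if __name__ == "__main__" and ARCSTATS.exists():
    size, c_max, ratio = summarize_arc(parse_arcstats(ARCSTATS.read_text()))
    print(f"ARC: {size:.1f} GiB of {c_max:.1f} GiB max, hit ratio {ratio:.1%}")
```

OpenZFS also ships ready-made tools (`arc_summary` and `arcstat`) that present these counters in more detail.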

So, what is L2ARC?

It is short for Level 2 ARC, and it holds data that has been in the ARC and is still wanted, but is considered less in demand by the clients.

Physically L2ARC usually consists of one or more fast SSD devices that we add to the system.

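Once the ARC has used up all the RAM available to it, OpenZFS regularly copies cached data that is about to be dropped from the ARC onto the L2ARC devices, so it can still be served from SSD after it is removed from RAM to make room for new read data. Removing data from the ARC is called eviction.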

This happens only if an L2ARC exists, and an L2ARC exists only when you choose to add it to your pools.
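A minimal Python sketch of this two-level read path, assuming plain LRU ordering at both levels for brevity (the real ARC is not plain LRU, and the real L2ARC feed copies blocks ahead of eviction rather than at the exact moment they leave RAM). Blocks pushed out of the RAM tier land in the SSD tier if one exists; a read checks RAM, then L2ARC, then the pool disks. All names are hypothetical.

```python
from collections import OrderedDict


class TwoLevelReadCache:
    """RAM tier (ARC stand-in) backed by an optional SSD tier (L2ARC)."""

    def __init__(self, ram_blocks: int, l2_blocks: int, pool: dict):
        self.arc = OrderedDict()      # RAM tier, least recently used first
        self.l2arc = OrderedDict()    # SSD tier; l2_blocks=0 means no L2ARC
        self.ram_blocks = ram_blocks
        self.l2_blocks = l2_blocks
        self.pool = pool              # block id -> data on the HDDs
        self.served_from = []         # where each read was satisfied

    def _cache_in_ram(self, key, data) -> None:
        self.arc[key] = data
        self.arc.move_to_end(key)
        if len(self.arc) > self.ram_blocks:
            old_key, old_data = self.arc.popitem(last=False)  # eviction
            if self.l2_blocks:        # only when an L2ARC device exists
                self.l2arc[old_key] = old_data
                self.l2arc.move_to_end(old_key)
                if len(self.l2arc) > self.l2_blocks:
                    self.l2arc.popitem(last=False)

    def read(self, key):
        if key in self.arc:
            self.arc.move_to_end(key)
            self.served_from.append("ARC")
            return self.arc[key]
        if key in self.l2arc:         # slower than RAM, faster than HDDs
            data = self.l2arc[key]
            self.served_from.append("L2ARC")
        else:                         # miss in both tiers: read the disks
            data = self.pool[key]
            self.served_from.append("pool")
        self._cache_in_ram(key, data)
        return data


pool = {n: f"block-{n}".encode() for n in range(10)}
cache = TwoLevelReadCache(ram_blocks=2, l2_blocks=4, pool=pool)
for n in [0, 1, 2, 3, 0, 3]:
    cache.read(n)
print(cache.served_from)
# ['pool', 'pool', 'pool', 'pool', 'L2ARC', 'ARC']
```

Run the same reads with `l2_blocks=0` and the fifth read goes back to the pool disks instead, which is the case the paragraph above describes.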

Is L2ARC also shared?

Unlike ARC, L2ARC is not shared by all pools within your system. It is pool-specific because you attach it to a pool, during or after its creation, and it gets used exclusively by that pool.

Remember that L2ARC is an option, while ARC is a must. You can choose to add a couple of NVMe disks to your system and use them as L2ARC, but you cannot eliminate ARC as it is a core component of OpenZFS.

What to use as L2ARC?

L2ARC can be a fast SSD or NVMe device. Those are still faster than HDDs, but will be slower than your RAM.

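To improve L2ARC performance, add several devices: ZFS spreads L2ARC data across all of a pool’s cache devices, which effectively stripes them (RAID0). Cache devices cannot be mirrored, and they do not need to be, since losing one only loses cached copies.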
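On plain OpenZFS, L2ARC devices are added with `zpool add <pool> cache <device>...`; listing several devices gives the striped layout described above. The Python sketch below only builds and prints the command unless you ask it to run it. The pool name `tank` and the device paths are placeholders, and on TrueNAS you would normally do this through the web interface instead.

```python
import shlex
import subprocess


def add_l2arc_cmd(pool: str, devices: list[str]) -> list[str]:
    """Build `zpool add <pool> cache <dev>...`; several devices are striped."""
    if not devices:
        raise ValueError("need at least one cache device")
    return ["zpool", "add", pool, "cache", *devices]


def run(cmd: list[str], dry_run: bool = True) -> None:
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)  # needs root and an existing pool


run(add_l2arc_cmd("tank", ["/dev/nvme0n1", "/dev/nvme1n1"]))
# zpool add tank cache /dev/nvme0n1 /dev/nvme1n1
```

Because cache devices hold only copies, they can later be taken out again with `zpool remove` without risking pool data.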

SSD durability is still important here, but not as much as when used for storage and SLOG. When choosing an SSD for L2ARC look for a device with:

- The highest random-read performance

- Power-failure protection (PFP)

- A reasonable endurance (DWPD) rating

Can I use the same SSD to add L2ARC to multiple pools?

Technically, you can create multiple partitions on the same SSD and dedicate each one as the L2ARC of a specific pool. But this technique is strongly discouraged.

The performance of your SSD is finite. It has a limited number of IOPS, and those IOPS are shared between all reads and writes happening on that disk. If you overwhelm it with I/O requests from different sources, mixing different kinds of reads and writes, the system’s performance will tank.

It is best to use a dedicated set of SSDs for each pool. Better performance and much less hassle.

What happens if I lose my L2ARC device?

Pretty much nothing. Both ARC and L2ARC hold duplicate copies of data on a faster medium (RAM and SSD), while the original data stays on the pool’s HDDs. Aside from a possible performance drop, there is nothing to worry about.

When to add L2ARC to my system?

When adding more RAM to your system is prohibitively difficult and/or expensive.

Remember these two facts:

- RAM is faster than anything else we have. It is still faster than the fastest SSD on the market today.

- Every block in the L2ARC is referenced by a header that is kept in RAM. This translates to: the larger your L2ARC is, the more RAM it consumes.

So, by using a large L2ARC device you end up eating more of your system RAM, which will decrease the amount of data that could be cached on that fast medium.
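To put a rough number on that RAM cost, here is a Python estimate. It assumes every L2ARC block has the same size and costs a fixed number of bytes of RAM for its header; the 96-byte default below is a hypothetical round figure, not an official OpenZFS constant, so substitute the value for your version. What matters is the ratio: small blocks need far more headers per terabyte than large 128 KiB records (the default ZFS recordsize).

```python
TiB, GiB, MiB, KiB = 2**40, 2**30, 2**20, 2**10


def l2arc_header_ram(l2arc_bytes: int, block_bytes: int,
                     header_bytes: int = 96) -> int:
    """RAM (bytes) needed to index a full L2ARC of equally sized blocks.

    header_bytes is an assumed per-block cost; check your OpenZFS version.
    """
    blocks = l2arc_bytes // block_bytes
    return blocks * header_bytes


for block in (128 * KiB, 16 * KiB):
    ram = l2arc_header_ram(1 * TiB, block)
    print(f"1 TiB L2ARC, {block // KiB} KiB blocks -> {ram / MiB:,.0f} MiB of RAM")
```

Under these assumptions, 1 TiB of L2ARC filled with 128 KiB blocks needs 768 MiB of RAM for headers, while the same device filled with 16 KiB blocks needs 6 GiB: RAM that is then no longer available to the ARC itself.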

Write Caching

OpenZFS write caching is a bit intricate. ZFS uses a Transaction Groups (TXG) mechanism: it first collects writes in system RAM, organises them, then flushes them to the pool in batches (groups) at short intervals, every 5 seconds by default.

Synchronous writes are additionally recorded in the ZFS Intent Log (ZIL for short), so they can be replayed if the system crashes before their transaction group reaches the disks. Unless you add a dedicated log device, the ZIL lives in blocks allocated on the pool’s own drives, spread across them rather than on a single disk.

This RAM-to-disk buffering helps the performance of HDD-based systems by letting the spinning disks write data in large batches instead of many small pieces. It also protects on-disk consistency: because ZFS is copy-on-write and commits each transaction group as a whole, a crash or power failure leaves the pool at the last fully committed group instead of half-written. Asynchronous writes that were still only in RAM are lost, though.

OpenZFS also uses a Transaction Delay mechanism, also known as the Write Throttle. When the amount of dirty data waiting in RAM shows that the pool’s disks cannot keep up with the rate of incoming writes, ZFS starts delaying each incoming write, giving the disks more time to finish writing the data to their platters.
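The write throttle is easiest to picture as a delay curve driven by how much dirty (not yet written) data is waiting in RAM: below a threshold writes are not delayed at all, and above it the per-write delay grows steeply as dirty data approaches its ceiling. The Python sketch below follows that general shape. Its parameters are named after OpenZFS tunables (`zfs_dirty_data_max`, `zfs_delay_min_dirty_percent`, `zfs_delay_scale`), but the default values shown are assumptions for illustration, so check the module-parameter documentation for your version before relying on them.

```python
GiB = 2**30


def write_delay_ns(dirty_bytes: int,
                   dirty_max: int = 4 * GiB,        # assumed dirty-data ceiling
                   min_dirty_percent: int = 60,     # throttling starts here
                   delay_scale_ns: int = 500_000,   # delay at the midpoint
                   delay_cap_ns: int = 100_000_000) -> int:
    """Per-write delay for a given amount of dirty data waiting in RAM."""
    threshold = dirty_max * min_dirty_percent // 100
    if dirty_bytes <= threshold:
        return 0                   # disks are keeping up: no delay
    if dirty_bytes >= dirty_max:
        return delay_cap_ns        # at the ceiling: maximum delay
    # Grows slowly past the threshold, then sharply near dirty_max.
    delay = delay_scale_ns * (dirty_bytes - threshold) // (dirty_max - dirty_bytes)
    return min(delay, delay_cap_ns)


for pct in (50, 70, 80, 90, 99):
    dirty = 4 * GiB * pct // 100
    print(f"{pct}% dirty -> {write_delay_ns(dirty) / 1e6:.3f} ms per write")
```

The hyperbolic shape is the design point: small overloads cost almost nothing, while a pool that keeps falling behind pushes writers back hard enough that dirty data never exceeds the ceiling.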

In OpenZFS, as in many other filesystems and data access protocols, there are two main types of writes:

- Synchronous Writes

- Asynchronous Writes

And both come with their own set of benefits and disadvantages.

Synchronous Writes

In a synchronous write (sync-write for short), the client sends the data to the storage system, and the system waits until the data has been safely written to stable storage before informing the client that it has been saved. Only then can the client proceed with sending the next batch of data. So, the upside is better data integrity, and the downside is slower performance, because the client must wait for a confirmation from the storage system for every successful write before sending any more data.

Asynchronous Writes

In an asynchronous write (async-write for short), the client sends the data to the storage system, and the system saves it in RAM and immediately informs the client that the data has been saved. The client can then proceed with sending the next batch of data. In the background, the system flushes the data from RAM to the disks with the following transaction groups, at a pace the disks can handle.

Because RAM is much faster than disks, async-writes perform better: the data only needs to be stored in RAM before the client is told it has been saved.

But the downside is that RAM is volatile: in the event of a system crash or a power failure, the transaction groups that were still in RAM will be lost. The client will think the data was safely written when it was not, and you might end up with files that are missing data, which can leave databases or virtual machine disks corrupt or inconsistent.
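A toy model makes the trade-off concrete. Both kinds of write get an ACK, but only the synchronous one has reached stable storage when the ACK is sent; a crash before the background flush silently drops the asynchronous one. This is a deliberately simplified Python sketch with hypothetical names, not how ZFS is structured internally (for one thing, ZFS sync writes go to the ZIL rather than straight to their final place).

```python
class ToyServer:
    """Sync writes reach stable storage before the ACK; async writes do not."""

    def __init__(self):
        self.ram = {}    # accepted but not yet flushed (lost on crash)
        self.disk = {}   # stable storage

    def write(self, name: str, data: bytes, sync: bool) -> str:
        if sync:
            self.ram.pop(name, None)  # drop any older buffered version
            self.disk[name] = data    # wait for stable storage first
        else:
            self.ram[name] = data     # buffered in RAM only
        return "ACK"                  # the client now assumes the data is safe

    def flush(self) -> None:
        """Background flush, like a transaction group being committed."""
        self.disk.update(self.ram)
        self.ram.clear()

    def crash(self) -> None:
        self.ram.clear()              # RAM is volatile


server = ToyServer()
server.write("invoice-1", b"paid", sync=True)
server.write("invoice-2", b"paid", sync=False)
server.crash()
print(sorted(server.disk))  # ['invoice-1']: invoice-2 was ACKed, then lost
```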

So, what is SLOG?

As a middle ground between relying solely on RAM (async-writes) and waiting for slow spinning disks to finish writing the data to their platters, ZFS can record synchronous writes on a fast non-volatile device (an SSD) and then tell the client that the data has been saved. In this scenario, even if the system crashes or a power interruption takes place, the data that has been written to the SSD will still be there after the system recovers, and ZFS replays it into the pool.

That intermediate device is called the Separate Log (SLOG for short).
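The Python sketch below models a SLOG as an append-only log under simplifying assumptions: a sync write is recorded in the log and acknowledged immediately, the data goes to the pool disks later from RAM with the next transaction group, and the log is only read back (replayed) after a crash. This mirrors how the ZIL behaves in outline, but every name here is hypothetical.

```python
class ToyPoolWithSlog:
    def __init__(self):
        self.ram = {}    # dirty data for the next transaction group
        self.pool = {}   # the HDDs
        self.slog = []   # non-volatile log records: (name, data)

    def sync_write(self, name: str, data: bytes) -> str:
        self.slog.append((name, data))  # fast SSD write
        self.ram[name] = data
        return "ACK"  # safe to ACK: the log record survives a crash

    def commit_txg(self) -> None:
        """Write dirty data from RAM to the pool; its log records are now obsolete."""
        self.pool.update(self.ram)
        self.ram.clear()
        self.slog.clear()

    def crash_and_recover(self) -> None:
        self.ram.clear()               # volatile state is gone
        for name, data in self.slog:   # replay the intent log into the pool
            self.pool[name] = data
        self.slog.clear()


p = ToyPoolWithSlog()
p.sync_write("vm-disk-block-7", b"new contents")
p.crash_and_recover()                 # crash before the TXG was committed
print(p.pool["vm-disk-block-7"])      # b'new contents'
```

Note that in the normal path the log is written but never read: the pool disks receive the data from RAM, which is why the SLOG only needs to be fast at writing and durable across power loss.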

What devices to use for SLOG?

It is recommended to use redundant fast SSDs with power-failure protection (PFP) and a high endurance (DWPD) rating. Mirroring your SLOG is critically important to protect against data loss if a single SLOG device fails during writes.
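On plain OpenZFS, a mirrored SLOG is added with `zpool add <pool> log mirror <dev1> <dev2>`. The Python sketch below builds that command and refuses to produce a single-device (unmirrored) log; it only prints unless asked to run it. The pool name `tank` and the device paths are placeholders, and on TrueNAS the web interface is the usual way to do this.

```python
import shlex
import subprocess


def add_mirrored_slog_cmd(pool: str, devices: list[str]) -> list[str]:
    """Build `zpool add <pool> log mirror <dev1> <dev2>...`."""
    if len(devices) < 2:
        raise ValueError("a mirrored SLOG needs at least two devices")
    return ["zpool", "add", pool, "log", "mirror", *devices]


def run(cmd: list[str], dry_run: bool = True) -> None:
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)  # needs root and an existing pool


run(add_mirrored_slog_cmd("tank", ["/dev/nvme2n1", "/dev/nvme3n1"]))
# zpool add tank log mirror /dev/nvme2n1 /dev/nvme3n1
```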

How big should my SLOG be?

Between 64GB and 128GB is enough for most systems. What matters here is redundancy, speed and durability, not size.
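A common rule of thumb shows why size matters so little. The SLOG only needs to hold sync writes that have not yet been committed by a transaction group, and transaction groups are committed every few seconds (5 seconds by default in OpenZFS, set by `zfs_txg_timeout`). Multiplying the maximum ingest rate by that interval, with headroom for a few transaction groups in flight, gives a rough upper bound. The Python below assumes the network link is the bottleneck and uses a headroom factor of 3; both are assumptions, not OpenZFS requirements.

```python
def slog_size_gb(link_gbit_per_s: float, txg_timeout_s: float = 5.0,
                 txgs_in_flight: int = 3) -> float:
    """Rough upper bound on useful SLOG capacity, in gigabytes."""
    ingest_gb_per_s = link_gbit_per_s / 8   # bits -> bytes
    return ingest_gb_per_s * txg_timeout_s * txgs_in_flight


for link in (10, 25):
    print(f"{link} GbE: about {slog_size_gb(link):.2f} GB")
```

Even a 25 GbE link saturated with sync writes comes out well under 64 GB by this estimate, which is consistent with the advice to prioritise redundancy, speed and durability over capacity.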

Can I use the same SSD for both L2ARC and SLOG?

Again, if you do not care about performance or data security, sure – why not.

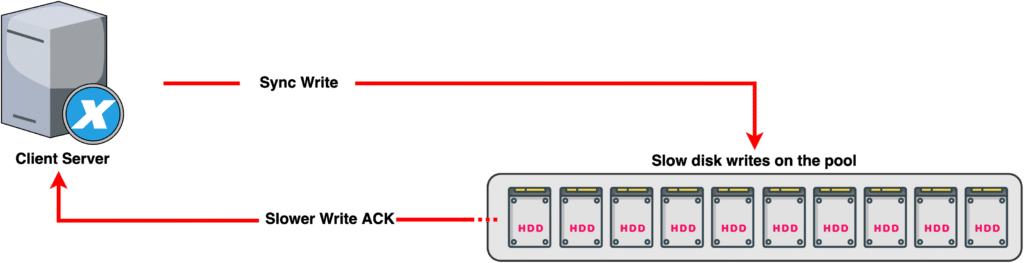

Finally, here is how it looks when your OpenZFS system does not have a SLOG:

In this example, data is sent from the Citrix virtualisation server to the pool. The Citrix server requires synchronous writes, so the system honors the sync request and only sends a write acknowledgement (ACK) once the data has been safely written to the underlying disks.

This means that ACKs can take longer, especially if you have slow drives or if one of your disks is giving up the ghost.

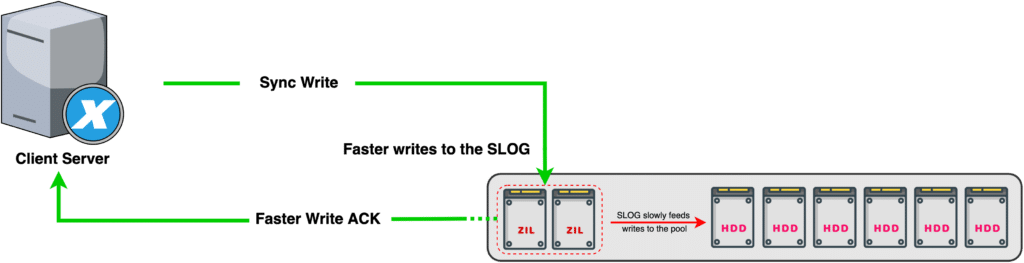

On the other hand, in a pool with a SLOG, the ACKs can be sent back much faster.

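In this setup, the system can confirm the write and send the ACK as soon as the data has been written to the SLOG set. The data then reaches the rotating disks from RAM with the next transaction group, at a pace they can handle; the SLOG copy is only read back if the system crashes before that happens.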
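Some back-of-the-envelope arithmetic shows why the faster ACK matters. If a client waits for each ACK before sending the next sync write (a queue depth of one), its throughput is capped at one write per ACK round trip. The latencies below are hypothetical round numbers chosen for illustration, not measurements.

```python
def max_sync_writes_per_s(ack_latency_ms: float) -> float:
    """Upper bound for one client that waits for every ACK (queue depth 1)."""
    return 1000 / ack_latency_ms


# Hypothetical ACK latencies, for illustration only.
scenarios = {"no SLOG (busy HDD pool)": 8.0, "NVMe SLOG": 0.1}
for name, latency_ms in scenarios.items():
    print(f"{name}: {latency_ms} ms per ACK -> "
          f"at most {max_sync_writes_per_s(latency_ms):,.0f} writes/s")
```

Under these assumptions the same client goes from at most about 125 to about 10,000 sync writes per second; real gains depend on your drives, workload and how many writes are in flight at once.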

In a nutshell

ECC memory can make the difference between a healthy and a corrupt dataset. If your data is valuable then the price difference should not matter and you must use ECC. Also, do not expect performance gains from ECC.

OpenZFS has two levels of read cache, ARC and L2ARC. ARC lives in the system RAM and usually consumes most of it – while L2ARC is an optional fast SSD that you can add to the system.

Adding more RAM to your system is better than any L2ARC device you may add. L2ARC is not free: it adds pressure on system RAM because the contents of L2ARC must be referenced in RAM. So, add L2ARC only when adding more RAM is impractical or too expensive for your system.

The ZIL is OpenZFS’s intent log for synchronous writes, and it is part of every ZFS pool. By default it lives on the pool’s own drives, unless you add a SLOG: a set of separate fast SSDs used to host the ZIL. SLOG devices can improve synchronous write performance. Make sure you opt for fast SSDs with a high durability rating. Mirroring your SLOG devices is a must for any serious application.

TL;DR

RAM and SSDs on OpenZFS

- ECC RAM is physically different from non-ECC RAM. Same applies to buffered and unbuffered RAM.

- ECC RAM can detect and correct single-bit errors, thus helping protect the integrity of your data.

- ECC RAM can also detect two-bit errors within a word, but it cannot correct more than one error.

- Registered (buffered/ RDIMM) ECC RAM allows for the installation of more memory in your server. Just make sure your mainboard supports it.

- ECC RAM is not faster than non-ECC (its latency is slightly higher), but the difference is negligible compared with the data security benefits.

- ECC RAM is not a must for OpenZFS, but it is highly recommended if data integrity and server stability matter to you.

- In-memory data corruption happens at a rate that correlates with the amount of data being processed by the computer system.

- If your OpenZFS/TrueNAS build is going to be used for storing critical data then use ECC.

- To use ECC RAM in your build you need a CPU, and possibly a mainboard chipset, that supports ECC.

- ECC or not, memory modules do fail. If you detect errors, immediately inspect your RAM.

- ZFS has a read cache, which is divided into two levels called ARC and L2ARC.

- ARC is a core component of OpenZFS. L2ARC is optional.

- ARC lives in your system RAM, while L2ARC could be a fast NVMe disk.

- Use several L2ARC devices side by side (striped) to speed up the L2ARC.

- Adding more RAM to your system is better than installing L2ARC devices.

- The ZIL is OpenZFS’s intent log. It is part of every OpenZFS pool.

- A SLOG is a fast SSD dedicated to host the ZIL portion of your pool.

- A SLOG can help improve your synchronous writes by quickly accepting ZIL writes, so clients get their ACK before the data reaches your HDDs with the next transaction group.